An issue with both physical and virtual environments is unused resources, particularly memory and storage. If you allocate 250GB to a VM and it’s only using 50GB of it, that’s a lot of wasted disk. Multiply that by dozens of VMs and you’ve got a lot of storage sitting idle. The good news is that modern hypervisors offer “thin-provisioned” virtual disks: even if you set the disk size to 250GB, if the OS is only using 50GB of it, only 50GB is actually consumed at the physical layer. This means you can “over-subscribe” your storage by allocating more of it in virtual disks than you actually have; the VMs can grab some of that additional 200GB if they need it for something, and when they’re done with it, the hypervisor takes it back. You don’t need to tightly monitor each VM’s utilization; you just give each one as much as it’s likely to need for regular operations and monitor the overall physical storage utilization of your environment.
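If you want to see the idea on an ordinary Linux box, a sparse file is a reasonable analogy: it has a large apparent size, but blocks are only allocated as data is actually written. This is an illustration only, not how VMware or Nutanix implement thin disks, and it assumes GNU coreutils (`truncate`, `du --apparent-size`) and a filesystem that supports sparse files.

```shell
#!/bin/sh
# Analogy only: a sparse file behaves like a thin-provisioned disk.
img=$(mktemp)

# "Provision" 1 GiB without writing any data.
truncate -s 1G "$img"
apparent=$(du -k --apparent-size "$img" | cut -f1)   # size the "disk" claims to be
allocated_before=$(du -k "$img" | cut -f1)          # blocks actually in use

# Write 50 MiB of real data at the start of the file, keeping its 1 GiB size.
dd if=/dev/urandom of="$img" bs=1M count=50 conv=notrunc 2>/dev/null
sync
allocated_after=$(du -k "$img" | cut -f1)

echo "provisioned:            ${apparent} KiB"
echo "allocated before write: ${allocated_before} KiB"
echo "allocated after write:  ${allocated_after} KiB"
rm -f "$img"
```

The apparent size stays at 1 GiB while the allocated size grows only by roughly the 50 MiB actually written, which is the same gap between provisioned and consumed space that thin provisioning relies on.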

The problem is that if you over-subscribe your storage (e.g., allocate 15TB of “thin-provisioned” storage to VMs in a physical environment containing only 10TB of storage) and your VMs do use it all up at once, you’re in trouble. So generally you don’t want to over-subscribe by more than, say, 25%, depending on how much your VM storage requirements fluctuate.
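To make the percentages concrete, here’s the arithmetic for that example as a small shell sketch. I’m assuming “over-subscribed by N%” means provisioned capacity exceeds physical capacity by N% of the physical capacity, and I’m using decimal units (1TB = 1000GB) to keep the numbers round.

```shell
#!/bin/sh
# Figures from the example above, in GB (decimal).
physical_gb=10000      # 10TB of real storage
provisioned_gb=15000   # 15TB handed out as thin-provisioned virtual disks

# How far provisioned capacity exceeds physical capacity, as a percentage.
oversub_pct=$(( (provisioned_gb - physical_gb) * 100 / physical_gb ))
echo "oversubscribed by ${oversub_pct}%"          # prints: oversubscribed by 50%

# Largest total you could provision while staying under a 25% cap.
cap_pct=25
max_provisioned_gb=$(( physical_gb * (100 + cap_pct) / 100 ))
echo "max provisioned at ${cap_pct}%: ${max_provisioned_gb} GB"   # prints: 12500 GB
```

So the 15TB example is over-subscribed by 50%, twice the suggested ceiling; with 10TB of physical storage, a 25% cap works out to about 12.5TB of thin-provisioned disks.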

Another issue that I discovered recently relates to how VMware recovers unused space from the VMs, and how Red Hat Enterprise Linux communicates to VMware that virtual storage is no longer needed, particularly in an environment with Nutanix infrastructure.

A short primer on Nutanix, if you aren’t familiar with it: you take a number of physical hosts and combine them into a cluster, combining the storage into a large pool. The VMs can live on any host in the cluster, using the CPU and memory resources available there, and their data is spread throughout the cluster and replicated for reliability. If a host fails, the VMs get restarted on other hosts, and any data blocks that were living on that host are restored from the copies available on other hosts. You can use a number of different hypervisors; if, for example, you want to use VMware, the storage containers created at the Nutanix level are presented to all the cluster hypervisors as regular NFS storage.

I support a Nutanix cluster that is dedicated to a large application with a lot of data constantly being written and cleaned up, several hundred GB every day. The cluster has a total of about 290TB of storage; with a “replication factor” of 2 (each block has a copy on another host in the cluster), that gives us about 145TB of “logical” space. We have about 15 very large VMs, each of which has a bit over 8TB of disk attached, along with some other smaller VMs, totalling about 114TB of storage, all thin-provisioned. We also use “protection domains” to create and replicate snapshots of the VMs to send to a “backup” cluster in another data center, and the delta disks for those snapshots take up several additional TB in storage.
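Here’s the capacity math from those figures as a quick awk sketch. Treating logical capacity as raw capacity divided by the replication factor is a simplification; Nutanix reserves some space for its own use, so the real usable figure is somewhat lower.

```shell
#!/bin/sh
# Figures from the cluster described above, in TB (decimal).
raw_tb=290
replication_factor=2
provisioned_tb=114

report=$(awk -v raw="$raw_tb" -v rf="$replication_factor" -v prov="$provisioned_tb" 'BEGIN {
  logical = raw / rf                      # each block is stored rf times
  printf "logical capacity: %.1f TB\n", logical
  printf "thin-provisioned: %.1f%% of logical\n", prov * 100 / logical
  printf "75%% alert at %.2f TB, 90%% at %.2f TB\n", logical * 75 / 100, logical * 90 / 100
}')
printf '%s\n' "$report"
```

This prints a logical capacity of 145.0 TB and shows the thin-provisioned disks come to about 78.6% of it. In other words, if every virtual disk ends up fully allocated, the disks alone land past the 75% alert threshold (108.75 TB) before the snapshot delta disks are even counted.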

I started getting alerts that we were using more than 75% of the available storage in the cluster, and usage kept growing, approaching 90%. Nutanix requires a certain amount of free space in order to operate, and in order to survive a host failure, since a host failure obviously reduces the overall available storage. If we went much beyond 90%, the cluster would begin to have lots of difficult-to-resolve issues (VMs freezing, filesystems becoming “read-only”, etc.). But wait…the VMs are only using about 35% of their virtual disks! Why is Nutanix saying we’re using almost 90%?
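That mismatch is easy to miss if you only monitor from inside the guests. Here’s a sketch of a guest-side check using the 75% and 90% thresholds from the alerts above (the function names are hypothetical). Run inside these VMs, it would have reported the healthy-looking ~35% figure, which is why the cluster-level number from Nutanix or vSphere is the one that actually has to be watched.

```shell
#!/bin/sh
# Thresholds taken from the alerts described above.
WARN_PCT=75
CRIT_PCT=90

# Classify a utilization percentage against the thresholds.
usage_level() {
  if [ "$1" -ge "$CRIT_PCT" ]; then
    echo critical
  elif [ "$1" -ge "$WARN_PCT" ]; then
    echo warning
  else
    echo ok
  fi
}

# Guest-side view: percentage of a mounted filesystem in use, according to df.
guest_used_pct() {
  df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Example: check the root filesystem (on the VMs above, e.g. /datastore01 instead).
pct=$(guest_used_pct /)
echo "/: ${pct}% used ($(usage_level "$pct"))"
```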

I took a look at our vSphere client, and it reported similar numbers to Nutanix: each VM was using 7+ TB of its 8TB storage. How was this possible?

We initially thought that the issue was with the protection domain snapshots. Snapshots in Nutanix work much like they do in VMware: when a snapshot is created, new data being written goes to “delta” disk files, and the original disk files are left quiesced so that they can be safely copied somewhere else, or restored in the event of a failure. Eventually, when the snapshot is deleted, the delta disk files get merged back into the main disk files. We had recently enabled protection domains for all the VMs, and the storage had been growing ever since, so it seemed a likely cause. Was Nutanix simply failing to clean up the delta disks, leaving them to grow and grow after the initial snapshots were created? The fact that the “.snapshot” directory within the datastore appeared to be using a remarkable 126TB of storage seemed to bear this out.

We called Nutanix, who explained that no, the .snapshot directory wasn’t actually using that much storage, at least not in addition to the actual data disks. The data disks themselves were stored there, and the files that appeared to be data disks in the usual VM directories were just pointing to them. So that turned out to be a red herring.
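A rough filesystem analogy for what Nutanix described is two directory entries pointing at the same data. The sketch below uses a hard link purely for illustration; that is my assumption, and Nutanix doesn’t necessarily use hard links internally. Measured directory by directory, the data looks like it exists twice; measured together, `du` counts the shared inode only once.

```shell
#!/bin/sh
# Analogy only: the VM directory entry and the .snapshot entry share one inode.
base=$(mktemp -d)
mkdir -p "$base/.snapshot" "$base/vm01"

# 20 MiB of data stored once, under .snapshot.
dd if=/dev/urandom of="$base/.snapshot/disk.img" bs=1M count=20 2>/dev/null
# A hard link in the VM directory: a second name for the same data, no new blocks.
ln "$base/.snapshot/disk.img" "$base/vm01/disk.img"
sync

snap_kb=$(du -sk "$base/.snapshot" | cut -f1)   # measured alone: the full 20 MiB
vm_kb=$(du -sk "$base/vm01" | cut -f1)          # measured alone: the full 20 MiB again
total_kb=$(du -sk "$base" | cut -f1)            # measured together: counted once

echo "sum of separate measurements: $((snap_kb + vm_kb)) KiB"
echo "measured together:            ${total_kb} KiB"
rm -rf "$base"
```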

The actual issue seems to be that the operating system on the VMs, in this case Red Hat Enterprise Linux 6 (RHEL6), and VMware don’t communicate very well about what space is actually reclaimable. When a file gets deleted in RHEL6, the data doesn’t actually get cleaned up right away; what gets removed is the directory entry and the “inode,” the metadata that describes where on the disk to find the data, and the data blocks are simply marked as free. The data itself stays where it is; it just gets overwritten later on by new data as needed. Over time, as the application wrote many GB a day to its filesystems, that data continued to stay on the disks, even though the inodes pointing to it had been deleted and the OS reported the space as available. VMware saw all those ones and zeroes and, with no signal from the guest that those blocks were free, assumed this was live data that could not be cleaned up by the hypervisor processes that maintain the thin provisioning.
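The mechanism a guest OS normally uses to tell thin-provisioned storage that blocks are free is a discard request (TRIM on ATA, UNMAP on SCSI), sent either by mounting the filesystem with the `discard` option or by running `fstrim` periodically. Whether those requests actually reach a thin virtual disk and get honored depends on the hypervisor version and VM configuration, so treat this as a first check only: a sketch that reads the kernel’s `discard_max_bytes` attribute in sysfs, where 0 means the device doesn’t advertise discard support. The function name is hypothetical, and older kernels may not expose the attribute at all.

```shell
#!/bin/sh
# Report whether the kernel sees discard (TRIM/UNMAP) support on a block device.
# Argument: a sysfs block-device directory such as /sys/block/sda.
discard_status() {
  max_file="$1/queue/discard_max_bytes"
  if [ ! -r "$max_file" ]; then
    echo unknown          # attribute not exposed by this kernel
  elif [ "$(cat "$max_file")" -gt 0 ]; then
    echo supported        # device advertises discard
  else
    echo unsupported      # 0 means the device does not accept discards
  fi
}

# Check every block device the kernel knows about.
for dev in /sys/block/*; do
  [ -d "$dev" ] || continue
  echo "$(basename "$dev"): $(discard_status "$dev")"
done
```

Even a “supported” result inside the guest only means the virtual device accepts discards; whether the space is returned to the datastore is up to the hypervisor and storage layers.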

In short, in an environment like this with lots and lots of data coming in and getting “deleted,” you can’t actually oversubscribe the storage because the OS and VMware don’t talk to each other very well about what data is actually needed.

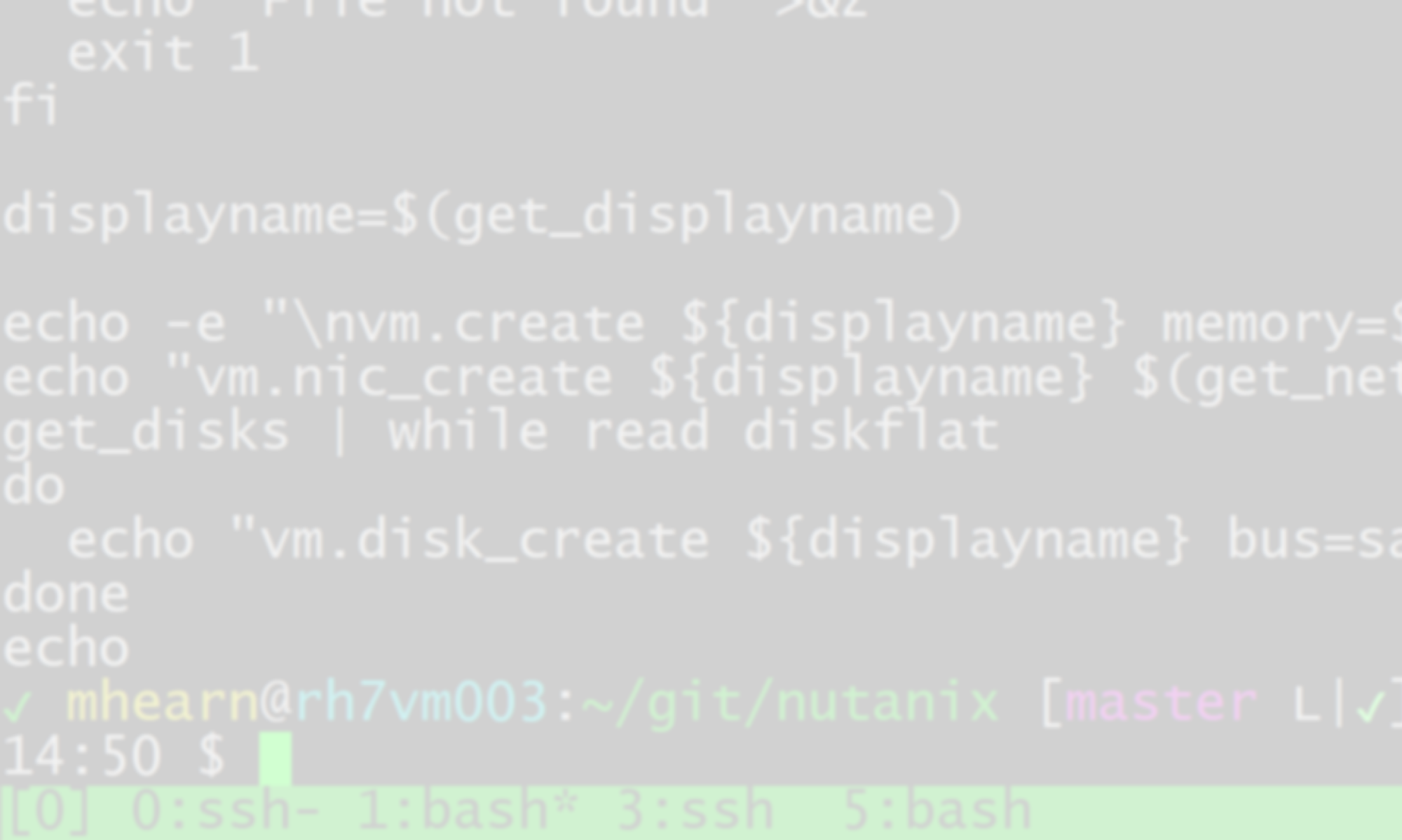

The fun part was the trick I used in the short term to clean things up until I could replace the virtual disks with smaller ones and “de-over-subscribe” the storage. It turns out that if you write a very large file containing nothing but zeroes to the filesystem and then delete it, VMware will see that space as reclaimable. It took a few days to run on all the filesystems in the cluster, but I used the “dd” command to write from /dev/zero to a file on each filesystem and then removed it; VMware would eventually knock back the reported storage utilization, and Nutanix’s “Curator” scans would reclaim the space as well.

    dd if=/dev/zero of=/datastore01/file_full_of_zeroes bs=1M count=1000000
    rm /datastore01/file_full_of_zeroes

The first command creates a roughly 1TB file (1,000,000 blocks of 1MiB each) containing nothing but zeroes; the second removes it. Doing that to each of the 4 datastores on each VM brought the reported utilization from 7TB down to 4TB per VM, and the Nutanix-reported utilization back under 60%. Some information I found online suggested that operations on the virtual disks from within the hypervisor, or even a storage migration, might be necessary to get VMware to reclaim the space, but it did so automatically (we’re running ESX 6.0.0, which may use different space reclamation techniques than previous versions).
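If you need to repeat the zero-fill trick across many filesystems, a slightly more careful version is worth having. The sketch below is hypothetical (the `zero_fill` name and its headroom and size-cap parameters are mine, not from any tool): it sizes the zero file from `df` so the filesystem never fills up underneath a live application, caps the amount written, flushes the zeroes with `sync` before deleting the file, and cleans up if `dd` fails.

```shell
#!/bin/sh
# Hypothetical helper: fill free space in DIR with zeroes, then delete the file.
# Arguments: DIR, HEADROOM_MIB (free space to leave untouched), MAX_MIB (write cap).
zero_fill() {
  dir=$1 headroom_mib=$2 max_mib=$3

  # Available space in KiB, from the POSIX-format df output (4th column).
  avail_kb=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
  count=$(( avail_kb / 1024 - headroom_mib ))   # number of 1 MiB blocks to write
  if [ "$count" -gt "$max_mib" ]; then
    count=$max_mib
  fi
  if [ "$count" -le 0 ]; then
    echo "zero_fill: not enough free space in $dir" >&2
    return 1
  fi

  zero_file="$dir/file_full_of_zeroes"
  if ! dd if=/dev/zero of="$zero_file" bs=1M count="$count" 2>/dev/null; then
    rm -f "$zero_file"        # don't leave a partially written file behind
    return 1
  fi
  sync                        # flush the zeroes to the (virtual) disk before deleting
  rm -f "$zero_file"
  echo "$count"               # report how many MiB were zeroed
}
```

For example, `zero_fill /datastore01 51200 1000000` would write up to the same ~1TB as the command above while leaving roughly 50GiB free. As before, the actual reclaim happens afterwards on the VMware and Nutanix side, not in the script.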